We kicked off 2026 with our first webinar of the year, Beyond Defect Detection: The New Rules of AI Quality Control in 2026. The goal was simple: look ahead at what is changing in machine vision, and talk honestly about why so many AI inspection projects still struggle to move beyond pilots and into full-scale production.

If you have tried to deploy AI inspection on a production line, you have likely felt the gap between what the market promises and what real deployment demands. The webinar focused on that reality check and on the emerging trends that help close the gap, especially around the data bottleneck.

Watch the full webinar recording

Why “defect detection” is an incomplete way to think about machine vision

Early in the webinar, we revisited a common misunderstanding. Many teams hear “AI inspection” and immediately compress the entire category into one mental model: defect detection.

In practice, quality control vision systems are rarely one single task. They are a bundle of tasks that work together, for example:

- Component verification

- Counting and presence checks

- Dimensional and measurement checks

- Surface anomaly detection

- Rule-based conditional logic that combines multiple checks

Defect detection is part of the picture, but it is not the whole system. Treating it as the whole system often leads to deployment plans that underestimate what it takes to reach production reliability.
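To make the "bundle of tasks" idea concrete, here is a minimal Python sketch of several checks combined by a conditional rule. Everything in it is an illustrative assumption: the field names, the thresholds, and the "premium" variant are hypothetical and do not come from the webinar or from any specific product.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


def inspect_part(m: dict) -> tuple[bool, list[CheckResult]]:
    """Run several independent checks and combine them into one verdict.

    All keys and thresholds below are hypothetical, for illustration only.
    """
    # Conditional rule: the premium variant gets a stricter surface threshold.
    surface_limit = 0.3 if m["variant"] == "premium" else 0.5

    results = [
        CheckResult("component_present", m["label_detected"]),
        CheckResult("screw_count", m["screw_count"] == 4,
                    f"found {m['screw_count']}, expected 4"),
        CheckResult("width_mm", 49.8 <= m["width_mm"] <= 50.2,
                    f"measured {m['width_mm']} mm"),
        CheckResult("surface", m["anomaly_score"] < surface_limit,
                    f"score {m['anomaly_score']}, limit {surface_limit}"),
    ]
    # The part passes only if every check passes.
    return all(r.passed for r in results), results


part = {"variant": "premium", "label_detected": True, "screw_count": 4,
        "width_mm": 50.1, "anomaly_score": 0.4}
ok, results = inspect_part(part)
failed = [r.name for r in results if not r.passed]
print(ok, failed)  # False ['surface']
```

Note that the surface anomaly score is only one of four inputs to the verdict. The same part would pass as a standard variant, which is the kind of rule a defect detector alone does not capture.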

If you want a grounded overview of how automated inspection fits into manufacturing workflows, this earlier guide is a helpful refresher:

https://zetamotion.com/a-practical-application-of-ai-for-manufacturers-automated-inspection-qc/

The promise, and the reality check manufacturers hit when scaling

The promise of AI quality control sounds like an obvious win. Reduce waste, reduce manual inspection load, free up skilled labour for higher value work, and catch issues earlier.

The reality is that scaling is where most teams get stuck.

In the webinar, we described three bottlenecks that show up again and again:

- Data

- Manpower

- Time

Off-the-shelf tools often suggest setup is quick and straightforward. But the hard part is not installing software. The hard part is building an inspection system that remains reliable when:

- Products are noisy, non-uniform, or visually variable

- There are many variants on the same line

- Defects are rare or inconsistent

- Conditions are not lab-perfect: lighting, orientation, and background all shift

This is the point where a deployment starts to feel less like a production project and more like a research project. You get “something working,” but it is not robust enough to scale.

The trap of “you can get started with 10 images”

A key discussion in the webinar was the difference between getting started and achieving production performance.

Yes, many tools can train a starter model with a small number of images. But teams often report the same curve:

- You get to around 80 to 85 percent accuracy fairly quickly

- To push beyond that, you need far more data

- Rare defects are not frequent enough to collect quickly

- You wait weeks or months to gather enough samples

- Then you repeat the cycle for each variant

The data requirement also grows with complexity. It is not just “100 images of a defect.” It is “enough images of that defect across the variations you will see in production.”
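A rough back-of-the-envelope calculation shows why this gets slow. The Python sketch below assumes, as a simplification, that every combination of variant, lighting setup, and orientation needs its own defect examples. All of the numbers are made up for illustration and are not figures from the webinar.

```python
import math


def images_needed(per_condition: int, variants: int,
                  lighting_setups: int, orientations: int) -> int:
    """Defect images needed if every combination of conditions must be covered."""
    return per_condition * variants * lighting_setups * orientations


def days_to_collect(target_images: int, parts_per_day: int,
                    parts_per_defect: int) -> int:
    """Days of production needed to see enough naturally occurring defects.

    parts_per_defect is the average number of parts produced per defect,
    so 1000 means one defective part in a thousand.
    """
    return math.ceil(target_images * parts_per_defect / parts_per_day)


# Illustrative numbers only.
target = images_needed(per_condition=100, variants=5,
                       lighting_setups=3, orientations=4)
print(target)                                # 6000
print(days_to_collect(target, 10_000, 1000))  # 600
```

Under these assumptions, "100 images of a defect" turns into 6,000 images, and a line producing 10,000 parts a day with one defect per thousand parts would need about 600 production days to see that many defects. Real projects rarely need a full grid of conditions, but the multiplication is why collection time grows so quickly.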

This is the core scalability issue. It is not that AI cannot work. It is that the data pipeline for inspection has historically been too slow and too manual.

The 2026 trend that matters most: solving the data bottleneck

When we looked ahead to 2026, one theme dominated: the industry is converging on ways to reduce the dependence on large, labelled, real-world defect datasets.

That is why synthetic data is becoming central to modern inspection workflows.

Synthetic data is not just augmentation. It is a way to create controlled, scalable training sets for defects, lighting, texture, and environment variation without waiting for defects to occur naturally.

If you want a clear definition and examples, this page is a strong starting point:

https://zetamotion.com/synthetic-data-for-quality-inspection/

Synthetic data has evolved through three eras

In the webinar, we outlined a useful progression of how synthetic data approaches have evolved:

1. Classic augmentation

This includes flips, rotations, and lighting tweaks applied to existing images. It can help, but it rarely solves true scarcity.
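As a minimal sketch of what classic augmentation does, the Python below applies a flip, a rotation, and two brightness changes to a tiny grayscale image stored as nested lists. Real pipelines would use an imaging library; plain lists are used here only to keep the example self-contained, and the function names are our own.

```python
def flip_horizontal(img):
    """Mirror each row left to right."""
    return [row[::-1] for row in img]


def rotate_90_clockwise(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def adjust_brightness(img, factor):
    """Scale pixel values and clamp them to the 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]


def augment(img):
    """Return a few transformed copies of a single source image."""
    return [
        flip_horizontal(img),
        rotate_90_clockwise(img),
        adjust_brightness(img, 1.2),
        adjust_brightness(img, 0.8),
    ]


image = [[0, 100],
         [200, 250]]
variants = augment(image)
print(len(variants))  # 4
print(variants[0])    # [[100, 0], [250, 200]]
print(variants[1])    # [[200, 0], [250, 100]]
```

Every output is still the same underlying defect seen a different way. That is why augmentation multiplies the image count without adding new defect instances.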

2. Simulation and digital twins

Teams build virtual representations of products and environments and render images. This can be very effective, but it often requires significant setup time, specialist skills, and investment.

3. Generative AI for synthetic inspection data

Generative models have improved quickly over the last couple of years. The opportunity now is not just image generation, but reliable, controllable generation that can produce curated datasets for training inspection models.

The hard part is reliability. Generative models are powerful, but manufacturers need consistent outputs, not surprises. The trend in 2026 is that more systems will focus on controllability, fine-tuning, and workflows that include human verification.

Edge AI is converging with synthetic data

Another 2026 trend discussed in the webinar is the practical shift toward edge inference and on-premises deployment.

For many manufacturers, inspection systems need to run without relying on cloud connectivity, whether for latency, uptime, or data security reasons. The good news is that hardware progress and deployment tooling are making on-premises AI more accessible than it was a few years ago.

In other words, the future is not “cloud only.” It is flexible deployment where training and inference can be adapted to the constraints of the facility.

Zetamotion’s Spectron overview includes a clear summary of why on-premises inference matters for manufacturing workflows:

https://zetamotion.com/spectron-overview/

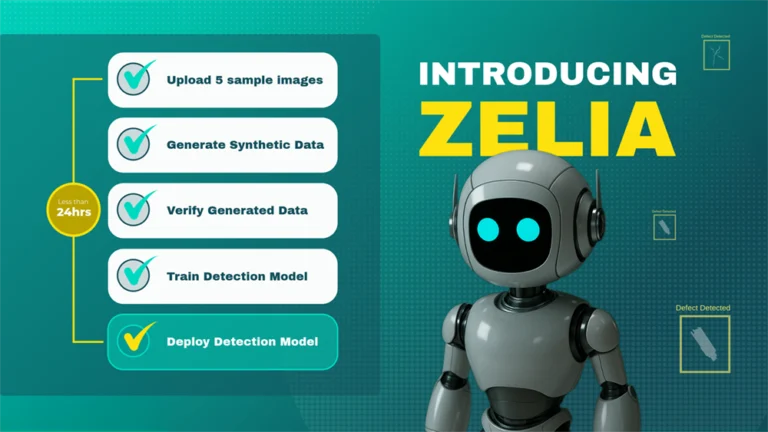

Introducing ZELIA: an end-to-end learning and inspection assistant

During the webinar, we also launched ZELIA, Zetamotion’s End to End Learning and Inspection Assistant.

The idea behind ZELIA is straightforward. Most AI inspection pain comes from the tedious, expertise-heavy parts of the workflow:

- Collecting data

- Curating data

- Labelling or creating masks

- Training and retraining models

- Tuning parameters

- Repeating the process for each new product or variant

ZELIA is designed to compress that workflow into an assistant-driven process that behaves more like collaborating with a human inspector.

In the webinar, we described it as a Star Trek-style interaction:

- Describe what you want to inspect

- Share a small number of example images

- Verify that the system understood the target

- Then receive a trained model ready for deployment

You can read more and request early access here:

https://zetamotion.com/zetamotion-end-to-end-learning-inspection-assistant/

How ZELIA works, in the workflow shown in the webinar

The demo walkthrough in the webinar explained a step-by-step pipeline that can be used through either a guided interface or a chat-style interface.

Step 1: Upload clean samples

You start by uploading around five to ten clean images of the surface or product you want to inspect.

Step 2: Generate synthetic clean variations and verify

ZELIA generates a large set of clean variations, for example around a thousand. A human then verifies the generated data quickly by keeping realistic samples and rejecting unrealistic ones.

Step 3: Upload defect samples and optionally mark defect regions

You upload around five defect examples. For best accuracy, the workflow includes an optional step where you outline the defect region on each sample, so the system learns what you are targeting.

Step 4: Generate synthetic defect variations with masks and verify

ZELIA generates a synthetic defect dataset along with masks, then you verify quality. If a generated mask or sample is not correct, you reject it.

Step 5: Train a detection model

Once clean and defect datasets are confirmed, ZELIA trains a detection model. In the webinar, we described this as the fastest step, potentially around 30 minutes, while data generation can take longer.

Step 6: Test, verify, retrain if needed

You test the model with samples. If results are not acceptable, you can regenerate data, adjust selections, and retrain.

The headline outcome discussed was speed. A full workflow can complete in hours rather than months, and even with thorough verification, it is realistic to get to a robust model within a day depending on hardware and review depth.

Questions from the webinar that reveal where the market is heading

The Q&A section of the webinar was useful because it surfaced the real concerns manufacturers have when evaluating AI inspection.

Complex geometry and 3D parts

A question asked how the system copes with more complex geometry. The answer was that 2D and 2.5D are easier, but 3D is possible, and reconstruction can be done from images in some cases. If CAD is available, it can speed things up.

This reflects a broader 2026 reality: geometry complexity is still a boundary condition for many systems, and hybrid approaches will matter.

Human effort required to verify synthetic datasets

Another question asked how much time a quality team needs to spend verifying synthetic images before training.

The webinar answer framed it as a quick human pass, like swiping through images, often around an hour depending on the dataset and thoroughness. Verification is an important guardrail. The point is not to eliminate humans, but to use human judgement efficiently.
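The Python sketch below shows one simple way such a keep or reject pass could be recorded, together with a rough time estimate. It is not ZELIA's interface: the class, its method names, and the three seconds per image are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewSession:
    """Records keep/reject decisions from a human pass over generated images."""
    seconds_per_image: float = 3.0  # assumed average time per decision
    kept: list = field(default_factory=list)
    rejected: list = field(default_factory=list)

    def decide(self, image_id: str, realistic: bool) -> None:
        """Keep realistic samples, reject unrealistic ones."""
        (self.kept if realistic else self.rejected).append(image_id)

    @property
    def acceptance_rate(self) -> float:
        total = len(self.kept) + len(self.rejected)
        return len(self.kept) / total if total else 0.0

    def estimated_minutes(self, num_images: int) -> float:
        """Rough review time for a dataset of the given size."""
        return num_images * self.seconds_per_image / 60


session = ReviewSession()
for image_id, realistic in [("img_001", True), ("img_002", True),
                            ("img_003", False), ("img_004", True)]:
    session.decide(image_id, realistic)

print(session.acceptance_rate)          # 0.75
print(session.estimated_minutes(1000))  # 50.0
```

At an assumed three seconds per image, a thousand generated samples take roughly 50 minutes, in line with the "around an hour" described above. Tracking the acceptance rate is also a cheap signal: a low rate suggests regenerating the data rather than reviewing more of it.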

The defect consensus problem

A manufacturer asked how to handle the common situation where multiple operators disagree on what counts as acceptable.

This is one of the most practical issues in quality control. In the webinar, we discussed using consistent inspection conditions and structured review workflows so teams can align on definitions, thresholds, and measurement rules. Once consensus is reached, the system applies it consistently.
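Before a team can align, it helps to see how much operators actually disagree and on which parts. The Python sketch below computes a simple pairwise agreement rate and lists the disputed parts that should go to a structured review. It is a generic illustration with made-up verdicts, not the method used in any particular platform.

```python
from itertools import combinations


def pairwise_agreement(labels_by_operator: dict) -> float:
    """Fraction of (operator pair, part) comparisons where both verdicts match."""
    agree = total = 0
    for a, b in combinations(labels_by_operator, 2):
        for x, y in zip(labels_by_operator[a], labels_by_operator[b]):
            agree += x == y
            total += 1
    return agree / total


def disputed_parts(labels_by_operator: dict) -> list:
    """Indices of parts where the operators did not all give the same verdict."""
    verdicts_per_part = zip(*labels_by_operator.values())
    return [i for i, v in enumerate(verdicts_per_part) if len(set(v)) > 1]


# Hypothetical verdicts from three operators on the same four parts.
labels = {
    "operator_a": ["ok", "ok", "reject", "ok"],
    "operator_b": ["ok", "reject", "reject", "ok"],
    "operator_c": ["ok", "reject", "reject", "reject"],
}
print(round(pairwise_agreement(labels), 2))  # 0.67
print(disputed_parts(labels))                # [1, 3]
```

Here the operators agree on about two thirds of comparisons, and parts 1 and 3 are the ones worth discussing. Those borderline parts are usually where the written definition or threshold needs tightening.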

This is also where feedback loops become critical. Human-in-the-loop feedback lets teams correct disagreements and evolve the inspection definition over time.

If you want to see what feedback loops and reporting look like at platform level, this Spectron page is relevant:

https://zetamotion.com/platform-configuration-reporting/

Maximum inspection window size

Another question asked about the maximum size of the inspection window. The answer highlighted that inspection window scale is often constrained by hardware. Very large parts can be covered by stitching multiple camera streams, while at the microscopic end the limits come from optics and image capture. Hardware innovation is moving quickly here, and software needs to plug into that progress.
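As a rough illustration of the hardware trade-off, the Python sketch below estimates how many side-by-side cameras are needed to cover a wide part when adjacent views overlap for stitching, and how much surface each pixel represents. The formula is standard geometry; the part width, field of view, overlap, and sensor resolution are hypothetical values.

```python
import math


def cameras_needed(part_width_mm: float, fov_width_mm: float,
                   overlap_mm: float) -> int:
    """Cameras in a row needed to cover the part, with overlap between neighbours."""
    if overlap_mm >= fov_width_mm:
        raise ValueError("overlap must be smaller than the field of view")
    if part_width_mm <= fov_width_mm:
        return 1
    # Each camera after the first adds (fov - overlap) of new coverage.
    return math.ceil((part_width_mm - overlap_mm) / (fov_width_mm - overlap_mm))


def mm_per_pixel(fov_width_mm: float, sensor_width_px: int) -> float:
    """Surface width represented by one pixel."""
    return fov_width_mm / sensor_width_px


# Hypothetical setup: a 2 m wide part, 450 mm field of view, 50 mm overlap.
print(cameras_needed(2000, 450, 50))       # 5
print(round(mm_per_pixel(450, 4096), 2))   # 0.11
```

With these assumed numbers, five cameras cover the part, and each pixel spans about 0.11 mm. A defect much smaller than that needs either a narrower field of view per camera, which means more cameras, or different optics.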

A practical takeaway for 2026: treat inspection like a system, not a model

If there is one message to take from the webinar, it is this:

The future of AI quality control is not a better single model. It is a better end-to-end system.

The winning systems in 2026 will reduce time to value by solving the data bottleneck, supporting rapid onboarding of new variants, enabling edge deployment when needed, and keeping humans in the loop where human judgement is essential.

That is what “beyond defect detection” means in practice.

If you want to explore the broader inspection stack and where synthetic data fits, these two pieces are good next reads:

https://zetamotion.com/synthetic-data-vs-real-data-in-quality-control-which-is-more-effective/

https://zetamotion.com/where-synthetic-data-for-automated-visual-inspection-systems-truly-shine/

And if you want to request early access to ZELIA, you can do that here:

https://zetamotion.com/zetamotion-end-to-end-learning-inspection-assistant/