If you have ever tried to get a computer vision or AI inspection system working on your line, you know the truth: it is a lot harder than the glossy brochures suggest.

Vendors often promise turnkey solutions where you mount a camera, train a model, and watch defects get caught automatically. In reality, engineers and QC teams quickly discover that these systems are anything but plug-and-play.

The Data Mountain Nobody Talks About

One of the biggest complaints we hear from engineers and inspectors is about data. It is not unusual for conventional AI inspection projects to require 10,000 or more sample images just to cover one product type. And those images do not magically teach the system. Each one has to be labeled by hand: where the defect is, what kind it is, and whether it is acceptable.

That can add up to thousands of hours of tedious labeling work before the system is even usable. And if your product line introduces a variation such as a new SKU or a different surface texture, you are back at square one, collecting and labeling another massive dataset.
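To make the scale concrete, here is a rough back-of-the-envelope sketch. The 10,000-image figure comes from the paragraph above; the six minutes per image and the three product variants are assumed values for illustration only, so substitute your own numbers.

```python
def labeling_hours(images_per_product, minutes_per_image, product_variants=1):
    """Estimate total manual labeling time in hours."""
    return images_per_product * minutes_per_image * product_variants / 60


# 10,000 images per product type is the figure quoted in the text.
# minutes_per_image=6 and product_variants=3 are assumptions, not measurements.
one_product = labeling_hours(10_000, minutes_per_image=6)
three_variants = labeling_hours(10_000, minutes_per_image=6, product_variants=3)

print(f"One product type: {one_product:,.0f} hours")
print(f"Three variants:   {three_variants:,.0f} hours")
```

Under these assumptions, one product type costs about 1,000 labeling hours, and every added variant adds the same amount again, which is why each new SKU feels like starting over.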

The Manpower Squeeze

Even once a model is trained, keeping it running is not trivial. Engineers spend weeks or months tweaking lighting setups, adjusting parameters, and retraining models whenever the production environment changes. It is like trying to keep a Tesla in Full Self-Driving mode on roads it has never seen. The system just does not know what to do with the unexpected.

Unlike people, who can adapt when they see something slightly new, AI inspection systems cannot imagine variations. They need to be spoon-fed examples for every scenario. That is why these projects so often turn into resource black holes, tying up experts who could be focused on improving processes instead of labeling defects.

The Defect Consensus Headache

Even defining what counts as a defect can become a battle. Is a bubble under 0.5 mm acceptable, but a bubble at 0.6 mm a fail? Where exactly do you measure it from? Shops and their customers often go back and forth on these questions, creating confusion and slowing down implementation.

Without a clear consensus, AI systems get stuck, producing inconsistent results and undermining trust in the whole setup.
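One way to take the ambiguity out of a question like the bubble threshold is to write the agreed rule down in executable form, so the shop, the customer, and the inspection system all apply the same definition. The sketch below is a hypothetical example: the `BubbleSpec` name, the measurement convention, and the choice of whether exactly 0.5 mm passes are all assumptions that the parties would need to agree on themselves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BubbleSpec:
    """Hypothetical acceptance rule agreed between shop and customer."""
    max_diameter_mm: float = 0.5      # limit taken from the example in the text
    limit_inclusive: bool = True      # assumption: a bubble of exactly 0.5 mm passes
    measurement: str = "longest axis of the bubble outline"  # assumed convention


def is_acceptable(diameter_mm, spec=BubbleSpec()):
    """Return True when a measured bubble is within the agreed limit."""
    if spec.limit_inclusive:
        return diameter_mm <= spec.max_diameter_mm
    return diameter_mm < spec.max_diameter_mm


for measured in (0.4, 0.5, 0.6):
    verdict = "pass" if is_acceptable(measured) else "fail"
    print(f"{measured} mm -> {verdict}")
```

Even this small rule forces two decisions that are easy to leave unstated: how the bubble is measured, and what happens exactly at the limit.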

Time, Money, and Frustration

All of this adds up to one thing: time. Getting a conventional AI inspection system from a promising demo to production-ready often takes months. In the meantime, engineers are stuck babysitting a system that was supposed to free up their time. Costs mount, frustration grows, and ROI slips further away.

Why Synthetic Data Is Taking Over

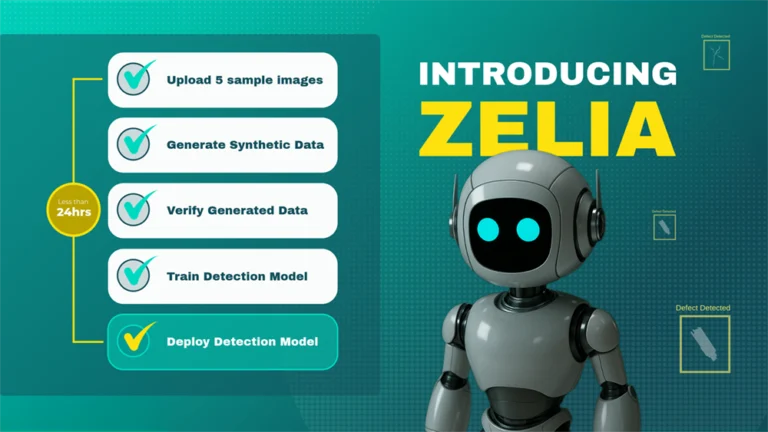

This is why so many in manufacturing are now looking beyond conventional AI inspection approaches. Instead of spending months collecting and labeling rare defects, teams can use synthetic data generation to produce the variations and examples needed to train an AI system at scale.

Think about how a human learns. You do not show a new QC trainee 10,000 labeled images. You show them a handful of real parts, explain the rules, and they can extrapolate to variations they have not seen yet. Synthetic data works the same way, capturing the essence of the product and generating the countless variations an AI system needs to perform at a superhuman level.
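The basic idea can be sketched in a few lines. This is a deliberately simple, generic illustration and not a description of how any particular product generates its data: it takes one stand-in image of a good part, varies the lighting and sensor noise, and paints in a dark spot at a random position. Because the code places the defect itself, the label comes for free. All names and parameter ranges here are assumptions.

```python
import random


def make_variation(base, rng):
    """Return (image, label) for one synthetic variant of a grayscale image.

    base is a list of rows of pixel values in the range 0..255.
    """
    height, width = len(base), len(base[0])

    # Simulate a lighting change (gain) and sensor noise (Gaussian jitter).
    gain = rng.uniform(0.8, 1.2)
    image = [
        [min(255, max(0, round(p * gain + rng.gauss(0, 3)))) for p in row]
        for row in base
    ]

    # Paint a dark square "defect" at a random position. Its location is
    # known because we placed it, so no manual annotation is needed.
    size = rng.randint(2, 4)
    top = rng.randint(0, height - size)
    left = rng.randint(0, width - size)
    for y in range(top, top + size):
        for x in range(left, left + size):
            image[y][x] = 0

    label = {"type": "dark_spot", "box": (left, top, size, size)}
    return image, label


rng = random.Random(0)                    # fixed seed for repeatability
base = [[200] * 16 for _ in range(16)]    # stand-in for one scanned good part
dataset = [make_variation(base, rng) for _ in range(1000)]
print(len(dataset), "labeled examples generated from one base image")
```

Real synthetic data pipelines model geometry, materials, and lighting far more faithfully than this toy example, but the principle is the same: one real sample plus explicit rules for variation yields many labeled examples.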

At Zetamotion, this principle is built into the Spectron platform. With just a single onboarding scan, it can create the equivalent of millions of training examples, without manual labeling or months of setup. That is how we help manufacturers cut through the noise, moving from trial and error to reliable inspection that scales across products and environments.

Closing Thought

The truth is that conventional AI inspection systems do not fail because engineers are not skilled enough. They fail because the approach itself is not scalable. Synthetic data changes that equation, making it possible to achieve accuracy at speed, without drowning in manual effort.

If you are curious about how synthetic data can help you escape the data bottleneck, start with our guide on Synthetic Data for Quality Inspection.